The NCHS Annual Meeting provided for many productive discussions about the impact of counterterrorism measures on humanitarian efforts.

This paper analyses the diffusion and strategic value of satellite technology in humanitarian contexts.

This PRIO blog examines why children’s education is a relevant concern for the Security Council and why education is a question of security.

The Culture House in Oslo is screening ‘Mama’, a film about Afghan refugees in Norway, followed by a panel discussion featuring NCHS Director, Antonio De Lauri.

International law scholars have published a series of essays examining legal and policy issues relevant to the current and future pandemics and migrant rights.

The International Humanitarian Studies Association Humanitarian Studies Conference is underway! Here is where you can see our NCHS members.

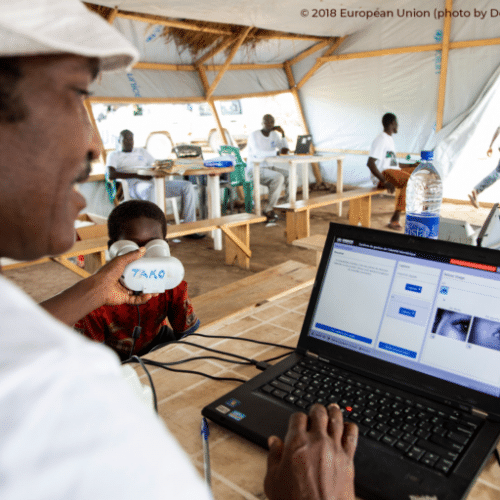

NGOs and UN agencies have collected personal data of millions of people in the global South. A new ICSC blog explores if this has been done with due prudence?

Join this seminar on 3 November hosted by the Institute for Social Research to launch the report “Vulnerable protection seekers in Norway: Regulations, Practices and Challenges”.

Workshop set to bring together academics to examine the humanitarian impacts of Europe’s current approach to immigration.

You can now view a recording of our webinar on the Afghan protection paradox emerging from the recent Taliban takeover in Afghanistan.

In the summer of 2023, as the UN convened to discuss generative AI, there were calls for an ethical and responsible governance framework to deal with risk and harm. In the humanitarian sector, it has been suggested that generative AI could help with content, participation, funding, administration, and stakeholder mapping but also that this technology may be ‘the next AK-47.’ Regardless of which position one adopts, generative AI has significant implications for humanitarian governance.

This is the third in a series of blog posts in which we examine emergent discussions on generative AI in the humanitarian space. The first blog discussed the framing of policy conversations around AI in humanitarian strategies. The second took stock of conversations around the broader implications of generative AI for aid work and workers. This blog unpacks the issue of humanitarian accountability for generative AI.

The struggle for humanitarian accountability has received significant policy focus and institutional investment in recent decades. Whereas the sector understands the need to be accountable to the people it is supposed to serve, accountability efforts are often geared upward to donors. The digital transformation of aid compounds these challenges by way of privacy issues, surveillance, cyber security problems, and mis/disinformation. Currently, generative AI is widely perceived as difficult to govern if not ‘ungovernable.’ What does this mean for the quest for humanitarian accountability? In this blog, we put forward a set of pointers for reflection.

To interrogate the relationship between humanitarian accountability and generative AI, we need to incorporate three separate accountability discussions, each with its own dynamics and tensions.

First, while accountability for uses of AI has become a prominent policy issue, generative AI further widens the gap between policy and practice. In global and regional governance forums, a lot of attention is given to accountability challenges surrounding the governance of AI and recently of generative AI. At the heart of these discussions are concerns about transparency, sustainability, bias, discrimination, singularity and the role and place of human oversight. In this context, the AI Principles of the OECD define accountability as follows:

> Accountability refers to the expectation that organisations or individuals will ensure the proper functioning, throughout their lifecycle, of the AI systems that they design, develop, operate or deploy, in accordance with their roles and applicable regulatory frameworks, and for demonstrating this through their actions and decision-making process (for example, by providing documentation on key decisions throughout the AI system lifecycle or conducting or allowing auditing where justified).

While such control and transparency were already complicated for previous forms of AI, the key difference with generative AI is that not only the analysis but the very selection of the data and presentation of the results are automated, be it as text, image, code or otherwise. This engenders new challenges with respect to traceability and foreseeable and unforeseeable harm. It is therefore an urgent question whether existing frameworks for AI risk governance and ‘responsible AI’ are applicable to generative AI or whether these have to be fundamentally adjusted. Without rigid watermarking of all AI-generated content, it is even questionable whether accountability can ever be achieved for generative AI.

Second, the continued absence of meaningful and effective accountability for humanitarian action is linked to structural factors. As noted above, the humanitarian sector grapples with the persistent challenge of combining upwards accountability to donors with participatory and meaningful accountability processes with affected communities. The humanitarian community pledges ‘to use power responsibly by taking account of, giving account to and being held to account by the people they seek to assist’. While all humanitarian agencies can do better on accountability, humanitarian accountability gaps are premised on the fundamental inequalities embedded in the global governance structure, big state power politics, transnational corporate resource grabs and democratic deficits at the national level. This gap is structural in nature and does not have a technological fix.

Third, the adoption, adaptation, and innovation of digital tools have generated their own accountability dynamics. The introduction of technology has generated new problems that exacerbate risk and harm, ranging from untested and experimental solutions deployed on vulnerable populations, cyber security, surveillance, misinformation, and disinformation to data protection and design problems and malfunctions. At the same time, digital tools are portrayed as a way to enhance the accountability efforts of humanitarian actors: better data and analysis, better feedback, and better oversight mechanisms will enable aid providers to be more accountable. Capturing this development, the 2023 Operational Guidance on Data Responsibility in Humanitarian Action states that in accordance with relevant applicable rules, humanitarian organisations have an obligation to accept responsibility and be accountable for their data management activities. Together, these developments also suggest a shift in the problem framing towards one where accountability problems are made amenable to digital interventions and digital solutions. In the next section, we look more specifically at an emergent set of approaches to humanitarian accountability for the use of AI.

In response to widespread humanitarian disenchantment with non-binding and non-enforceable ethics norms, there is a strong focus on the need for legal accountability for digital tools. Legal accountability is both the most straightforward and hardest part of this puzzle. It is straightforward because it turns accountability into clear rules and procedures of compliance, but it is also the hardest because of the relative absence of such legislation at present. Here we map out bits and parts of what we colloquially call the turn-to-law narrative:

Existing law is insufficient, but the development of new law is too slow. At present, there is a flurry of activity with respect to developing and adopting new hard laws to tackle AI. These instruments – old and new – offer more or less principled approaches to accountability. The use of generative AI to analyse and synthesise enormous amounts of data may raise privacy concerns, as sensitive information about individuals could be inadvertently exposed or misused. Data protection legislation like the EU GDPR offers a tangible but partial starting point for thinking about the responsibility for and legality of generative AI in this domain. Most prominently, the upcoming AI Act of the European Union may require a minimum level of accountability as a precondition for applying risky AI systems at all, and this may set a precedent elsewhere. Similarly, the proposal for the EU AI liability directive, criticised for being ‘half-hearted’, deals with liability for certain kinds of damages produced by AI systems. Yet, perpetually outpaced by technological developments and subjected to intense lobbying by Big Tech, the path towards a shared conception of the legal problems posed by generative AI, and of their solutions, is demanding. Additionally, there are several questions pertaining specifically to the sector:

Can humanitarians overcome their aversion to regulation? While humanitarians might like more regulation of technology, within the sector, legal procedures, legal compliance and the lawyers trying to oversee it have a bad name. Humanitarians are sceptical about regulation, law, courts, and lawyers not related to international humanitarian law (IHL). As recently noted by Meg Sattler, given the precarious nature of humanitarian work,

> it is no surprise that humanitarians have shied away from regulatory processes… But the absence of regulation sees us perpetuate the myth of humanitarianism as altruism when it is actually self-monitored against non-enforceable standards for which nobody is held accountable.

Instead, there is a plethora of so-called ‘ethical’ frameworks, like the above-cited IASC Guidance, that define principles which are maintained by agencies themselves and weighed against their other principles, interests, and concerns.

Can generative AI in emergency contexts even be legally regulated? Even if humanitarians overcome their dislike of law, the problem for generative AI in these settings is that it is hard to say where a legal alternative to such ethical guidance should start and end. The modes and implications of such systems are so elusive and intricate that finding the right balance between the risks and benefits through generic legislation is enormously challenging. Moreover, when operating in countries in crisis and war, with domestic partners and through locally led initiatives, the legal regulation of AI is no immediate guarantee of humanitarian accountability.

Can – and should – IHL be updated to regulate generative AI in aid? This question is part of what has by now almost become a parallel track to the hype cycle, where practitioners and academics ask whether IHL is adequate for dealing with drones, autonomous weapons, cyber-attacks – and AI – and where Big Tech actors call for a ‘digital Geneva Convention’. As IHL is focused on armed combat and the use of violence, and only to a limited degree regulates the everyday practice of humanitarian action, resorting to IHL does not provide a pathway to accountability.

Should humanitarians return to rights-based approaches? Recently, several commentators have suggested that the humanitarian community should revisit the concept of rights-based approaches and anchor normative approaches to generative AI in existing human rights instruments. This entails rethinking approaches to innovation, acknowledging that the use of AI is deeply local in its impact and that empowerment and participation remain crucial.

The need to reframe the problem: Our last point concerns the need to reframe what generative AI is actually doing to the humanitarian sector, beyond individual applications. Generative AI is not ungovernable, but it is mischaracterised. We argue for an understanding of the use of generative AI in aid as a form of humanitarian experimentation.

Acknowledging the fundamental problem with generative AI: The adoption of appropriate laws, policies and investment in oversight mechanisms and capacity building is necessary to govern experimental technologies. It is well known that when employing novel solutions in humanitarian emergencies with outcomes that are hard to predict or control, implementation is challenging. However, with accusations that generative AI is essentially developed by way of stolen data, amounting to ‘the largest and most consequential theft in human history’, the conversation can no longer only be about providing better and more effective governance of experimental practices. The aggregate impact of generative AI in the humanitarian sector will be to transform the modes of humanitarian governance beyond its ‘digital tools.’ This means shifting the focus from thinking about accountability for governing experimental technologies to accountability for experimental humanitarian governance.

It is still early days for humanitarian generative AI. As practitioners, policymakers, and academics – thinking and working together – we need to learn more and to critically revisit the means and ends of humanitarian accountability. We hope the broad, conceptual analysis provided here can be a useful contribution. In the end, the most fundamental impact of generative AI on humanitarian agencies will stem from its effects on the societies in which the agencies operate. Humanitarians need to determine what they can and should take responsibility for and try to tackle, and what is beyond their remit. Misinformation, polarisation, and authoritarian control were already central impediments to humanitarian action as well as sources of humanitarian problems before AI entered the stage.

To think responsibly about generative AI, we need to shift our problem framing. In the end, the argument of this blog boils down to the following: the adoption and adaptation of generative AI represents a comprehensive and unprecedented mainstreaming of humanitarian experimentation across the aid sector. Humanitarians must understand and own this accountability problem.