The situation in Afghanistan changes by the minute. In this blog post, we want to call attention to a largely overlooked issue: protection of Afghan refugees or other Afghans who have been registered biometrically by humanitarian or military agencies. Biometrics have been collected from various parts of the Afghan population, for different purposes and with different technical approaches, and recent events teach us a vital lesson: both the humanitarian and the military approach come with significant risks and unintended consequences.

Afghanistan, UNHCR and biometrics: risks of wrongfully denying refugees assistance

As embassies in Afghanistan are being evacuated and employees of international humanitarian agencies wonder how much longer they will be able to work, contingency plans are drawn up: Will there be population movements, will there be camps for IDPs in Afghanistan or for refugees across borders? How will they be registered? How will they be housed? Contingency planning will help save lives.

Future planning must learn from experiences of the past. In the case of Afghanistan those are dire. More than forty years ago, on Christmas Eve in 1979, the Soviet Army invaded the country. Afghans began fleeing and sought refuge across nearby borders. Numbers swelled progressively. A decade later there were more than five million refugees in Pakistan and Iran. The departure of Soviet troops was followed by continued civil war and the reign of the Taliban from 1996 to 2001.

A US-led coalition of Western powers dislodged the Taliban regime after the 9/11 attacks in New York. This was the starting point for the international community to invest in the return of Afghan refugees to their home country. The UN Refugee Agency (UNHCR) was tasked with organizing the return and found itself facing several challenges, including limited financial and technical capacities, and problems linked to the sheer number of persons to be repatriated. Although UNHCR had started to develop and operate large-scale automated registration systems as early as the 1990s, these were not yet sufficiently advanced to deal with several million people. At the time, nobody had such systems. Registration was eventually outsourced to Pakistan’s National Database and Registration Authority (NADRA). Refugees were given Proof of Registration (PoR) cards issued by the Pakistani government. Another problem was the integrity of the voluntary return programme. Donors provided funds to UNHCR for the agency to disburse significant cash grants to Afghan refugees as incentives to return. But how could the agency ensure that nobody would come forward more than once to claim the allowance? There was no precedent. UNHCR was charting new territory and testing new approaches.

A ‘solution’ was offered by an American tech company: biometrics. A stand-alone system was set up. The iris patterns of every returning refugee above the age of 12 (later the age of 6) were scanned and stored in a biometrics database. To protect the privacy of these individuals, each refugee’s iris image was stored anonymously. The belief was that the novel biometric system would comply with data privacy standards if the iris images were stored anonymously. The system performs ‘one-to-many’ matching, meaning that one iris image is compared against all the iris images stored in the database to search for a potential match. A returning refugee would therefore only receive a cash grant if their iris could not be found in UNHCR’s new biometric database. If the iris was found in the database, this was taken to mean that the person had already received a cash grant earlier, and repatriation assistance was thus denied. Millions of Afghans received their allowances and left images of their irises in UNHCR’s biometric database. Today, the total number of images in this database stands at well above four million.
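To make the logic of the paragraph above concrete, here is a minimal sketch of an anonymous one-to-many check. It is purely illustrative and not a description of UNHCR’s actual software: the class name `AnonymousIrisStore`, the 16-bit toy templates, the Hamming-distance comparison and the match threshold are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import List


def hamming_fraction(a: int, b: int, bits: int) -> float:
    """Fraction of bits that differ between two fixed-length templates."""
    return bin(a ^ b).count("1") / bits


@dataclass
class AnonymousIrisStore:
    """Toy stand-alone store: templates only, no names, no case numbers."""
    bits: int = 16            # hypothetical template length
    threshold: float = 0.25   # hypothetical match threshold
    templates: List[int] = field(default_factory=list)

    def claim_grant(self, template: int) -> bool:
        """Return True if the grant is paid, False if it is denied."""
        # One-to-many: compare the new scan against every stored template.
        for stored in self.templates:
            if hamming_fraction(template, stored, self.bits) <= self.threshold:
                # A match is read as "already paid". Nothing is stored that
                # could tell us whose template this actually was.
                return False
        # No match: enrol the scan anonymously and pay the grant.
        self.templates.append(template)
        return True


if __name__ == "__main__":
    store = AnonymousIrisStore()
    print(store.claim_grant(0b1010101010101010))  # first claimant
    print(store.claim_grant(0b1010101010101001))  # different person, similar template
```

In this toy setup the second claimant differs from the first in only two of sixteen bits, so the check treats them as the same person and refuses the grant. Because the store holds nothing but templates, the sketch has no place where such a decision could be examined or reversed.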

Fifteen years after its introduction it was discovered that while this novel system was designed with the good intention of providing privacy protection to iris-registered returnees, it had unintentionally opened a Pandora’s box: automated decision-making without any possibility of recourse. If the biometric recognition system produced a false positive match (i.e., mistakenly matched the iris scan of a new returnee with one already registered in UNHCR’s database), which statistically is possible, even likely, there was no way this returnee could prove that the machine’s match was in fact wrong. Since all scans are stored anonymously, a person cannot prove that the iris in UNHCR’s database belongs to someone else, even though that is a likely scenario for a system being tested on an unprecedented scale. Likewise, no UNHCR staff member can overturn the decision of the machine. Thus, by default, the machine is always ‘right’. This logic risks turning the intended aim of privacy protection into a problem, namely denying assistance to returnees who rightfully claim repatriation cash grants from UNHCR.
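Why a false match becomes more likely as the database grows can be shown with a short back-of-the-envelope calculation. The sketch below assumes that each comparison against a stored record has the same small, independent chance `p` of a false match, so that a new returnee checked against `N` records is falsely matched at least once with probability 1 - (1 - p)^N. The per-comparison rates used here are hypothetical placeholders, not measured figures for UNHCR’s system; only the database size of four million is taken from the text.

```python
import math


def prob_any_false_match(per_comparison_rate: float, database_size: int) -> float:
    """Chance that a never-registered person falsely matches at least one
    stored record, assuming independent comparisons: 1 - (1 - p)^N."""
    if not 0.0 <= per_comparison_rate <= 1.0:
        raise ValueError("per_comparison_rate must be between 0 and 1")
    if database_size < 0:
        raise ValueError("database_size must not be negative")
    if database_size == 0:
        return 0.0
    if per_comparison_rate == 1.0:
        return 1.0
    # log1p/expm1 keep precision when the rate is very small.
    return -math.expm1(database_size * math.log1p(-per_comparison_rate))


if __name__ == "__main__":
    database_size = 4_000_000            # "well above four million" in the text
    for rate in (1e-10, 1e-9, 1e-8):     # hypothetical per-comparison rates
        risk = prob_any_false_match(rate, database_size)
        print(f"rate {rate:.0e}: chance of a wrongful denial per new returnee = {risk:.4%}")
```

Under these assumptions the risk to each new returnee rises roughly in proportion to the number of stored records. Even a very accurate matcher will therefore wrongly refuse some people once it has been applied to millions of claimants.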

This system has never been replicated elsewhere. UNHCR has modernized its global registration systems in recent years and continues to use iris scanning and other biometric identifiers. In its current system, each image is linked to a person and can be checked in case of doubt. Such system designs are, however, not unproblematic either.

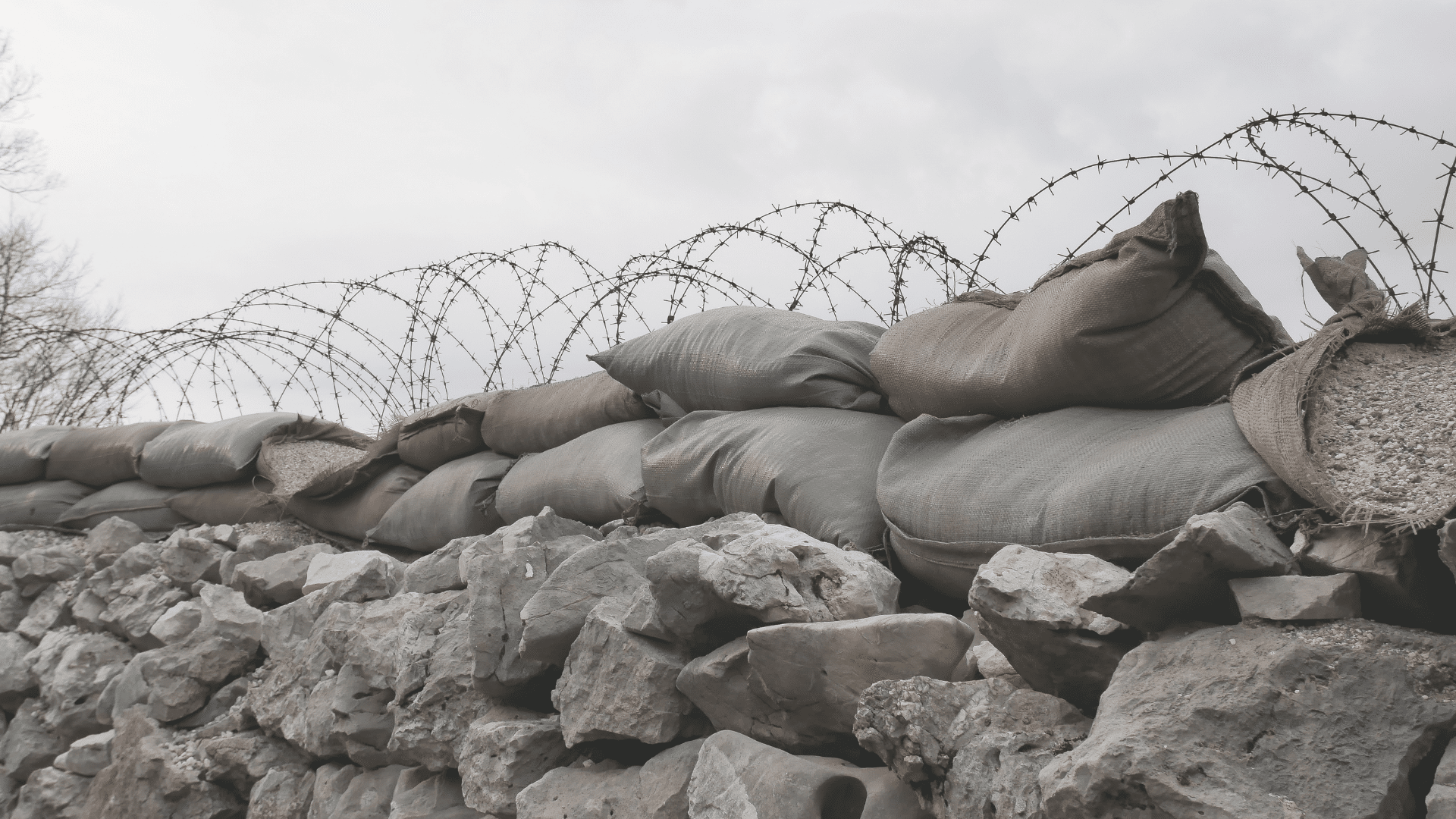

US military and identifiable biometrics in the hands of the Taliban: risk of reprisals

As embassies in Afghanistan are being evacuated, not only are many vulnerable individuals being left behind; biometric identification devices have been left behind as well. Indeed, not only UNHCR but also the US military has been collecting biometrics, though from very different parts of the Afghan population. This includes biometric data (i) from Afghans who have worked with coalition forces and (ii) from individuals encountered ‘in the field’. In both cases the US military used biometrics, for example, to check the identity of these individuals against the US DoD’s Biometric-Enabled Watchlist, which contains biometrics from wanted terrorists, among others. As news has circulated about the Taliban getting their hands on biometric collection and identification devices left behind, and on the sensitive biometric data that these devices contain, an assessment of the situation, the risks, and the lessons is called for. What will the Taliban do with this data and with these devices? Will they, for example, use them to check whether an individual has collaborated with coalition forces?

If that is the case, it could have detrimental repercussions for anyone identified biometrically by the Taliban. The Taliban regime of course cannot check the iris scans and fingerprints of all individuals throughout Afghanistan. Yet, as we have seen in many other contexts, including humanitarian access, biometric checks could be introduced by the Taliban when Afghans, for example, cross a checkpoint moving from one region to another, or request access to hospitals or other government assistance. Would someone then decide not to go to hospital for fear of being identified by the Taliban as a friend of their coalition enemy? Or, as Welton Chang, chief technology officer at Human Rights First, noted, the biometric databases and equipment in Afghanistan that the Taliban now likely have access to could also be used “to create a new class structure – job applicants would have their bio-data compared to the database, and jobs could be denied on the basis of having connections to the former government or security forces.”

There are many worst-case scenarios to think through and to do our utmost to avoid, and there are many actors who should see this as a call to revise their approaches to the collection and storing of biometric data. Besides the two examples above, a third deserves mention: as part of its migration management projects worldwide, IOM has in recent years supported the Population Registration Department within the Ministry of Interior Affairs (MoIA) in the digitalization of paper-based ID cards (“Tazkiras”). The main objective of the project is to accelerate the identity verification process and to establish an identity verification platform. Once operational, the platform can be accessed by external government entities that depend on identity verification for the provision of services. Since 2018, more than two million Afghan citizens have been issued a Tazkira smartcard which is linked to a biometric database. The IOM project also supports the Document Examination Laboratory under the Criminal Investigation Department of MoIA in upgrading their systems and knowledge base on document examination.

What should be done? Access denied or data deleted

While this blog post cannot possibly cover all the various cases that involve biometrics in Afghanistan, it seems that the diverse range of actors that have collected biometric data from Afghans over the past 20 years need to undertake an urgent risk assessment, ideally in a collective and collaborative manner. On that basis a realistic mitigation plan should be developed. How, for example, can access be denied or data deleted?

We do not know what will happen next in Afghanistan. Should the situation develop in a way that sees a new wave of refugees into Pakistan, UNHCR’s stand-alone iris system would lose its relevance, because the new refugees could well be those four million who returned during the past 19 years, and whose biometric data UNHCR has already processed once before and keeps in its database. In such a scenario, the database would serve no purpose and preparations should be made to destroy it in line with the Right to be Forgotten. Indeed, there is consensus among many human and digital rights specialists that individuals have the right to have private information removed from Internet searches and other directories and databases under certain circumstances. The concept of the Right to be Forgotten has been put into practice in several jurisdictions, including the EU. Biometric data is considered a special category of particularly sensitive data whether it is stored anonymously or not. Unlike ID cards and passports, a biometric identity cannot be erased: you will always carry your fingerprint and iris. In fact, the main legal basis for the processing of sensitive personal data is the explicit informed consent of the concerned individual.

Another lesson for future reference should be the understanding that neither anonymized nor unanonymized biometric data provide easy technological solutions. None of the above approaches can be replicated in future wars or interventions without serious reconsideration, including questions about whether and why the data is needed and careful attention to whether it should be deleted. Hence, this is the moment for UNHCR, as the global protection agency, to review and showcase its learnings from this project. It is time to show respect for the digital rights of those who have certainly never consented to their biometric data being maintained in a database beyond the point of usefulness.

One advantage of seeing this humanitarian biometric system in parallel with US military use, and other uses of biometrics in Afghanistan, is that together these examples powerfully illustrate some of the many challenges confronting the at times stubborn belief in biometrics as a solution, making challenges visible from many different ‘user’ perspectives. Anonymous data is not a solution (as per the UNHCR example), nor is unanonymized data (as per the US military example). What should we do then? What do both ‘failures’ mean for how to think about the use of biometrics in future interventions, humanitarian and military?

Having stored this data for almost two decades, and now concluding that this effort was potentially not just useless, but more seriously risked producing additional insecurity (e.g. to Afghans wrongfully denied humanitarian assistance), should signpost the need to reconsider the taken-for-granted assumption that the more biometrics are collected from refugees the better. This should be a starting point to review both the risks of data that is traceable to individuals and those of anonymous data. So far we have paid attention to refugee digital bodies and digital dead bodies, but what about abandoned digital bodies?